Stark kept going, adding new capabilities to his armour, reaching peak performance with the Model Prime and finally calling it a day with the Mark 85. (More like Captain Marvel blasted him in Civil War II or the Gauntlet irradiated him, depending on whether you prefer the comic or the cinematic universe.)

Computer Vision is a field making rapid progress, with new techniques and higher accuracy coming from developers across the planet. Thanks to Deep Learning, machines now have human-like perception capabilities: they can not only understand and derive information from digital images but also create images from scratch with nothing but 0’s and 1’s.

How did it begin?

Time and again, the higher tech-deities bring me to a point in this space-time continuum where I am faced with a conundrum. Back in our final year of college, my team and I were building a smart wearable for people with impaired vision, an AI-enabled extension of sorts to help the user with recognizing objects, recognizing people, and performing Optical Character Recognition; we called it Oculus. In all honesty, we did not rip it off from Facebook’s Oculus Rift VR headset; the name was purely coincidental. The AI engine comprised a multitude of classifiers, object detectors and image captioning neural networks running with TensorFlow and Python. With my simpleton knowledge of writing optimized code, everything was stacked sequentially, which kept us from getting results in real time, an absolute necessity for our wearable. Merely by running the entire stack on the GPU and using concurrent processes, I was able to achieve 30 fps and derive real-time results.

Ratcheting my way through

Fast forward two years to the present: I currently work as an AI Architect at Integration Wizards. My work predominantly revolves around creating a digital manifestation of the architecture I come up with for our flagship product, IRIS. The two primary hindrances were the API-based architecture becoming a bottleneck under higher loads and the object detection neural networks being heavy. I needed something better: a better queue and processing architecture along with faster neural nets. After Googling and surfing Reddit for a couple of days, I came across Apache Kafka, a publish-subscribe messaging system built for high data traffic. We retrofitted the architecture to push several thousand images per second from the CCTVs to the neural networks to obtain our analytical information. We also devised another object detection model that was anchor-less and ran faster while retaining performance. Of course, the benchmark was against the famous COCO dataset.

This increased our processing capability to close to 200 fps on a single GPU.
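The idea of decoupling the cameras from the neural networks with a queue can be illustrated with a minimal sketch. This is not Kafka and not IRIS code: it assumes Python's standard `queue` and `threading` modules as an in-process stand-in for a message broker, and the names `camera`, `detector` and `run_pipeline` are hypothetical.

```python
import queue
import threading

SENTINEL = None  # hypothetical end-of-stream marker, one per worker


def camera(frames, topic):
    """Publisher: pushes frames onto the queue without waiting for inference."""
    for frame in frames:
        topic.put(frame)


def detector(topic, results, lock):
    """Subscriber: pulls frames from the queue and processes them."""
    while True:
        frame = topic.get()
        if frame is SENTINEL:
            break
        detection = f"processed:{frame}"  # placeholder for a neural network call
        with lock:
            results.append(detection)


def run_pipeline(frames, num_workers=4):
    # A bounded queue makes the publisher block when the subscribers fall behind.
    topic = queue.Queue(maxsize=1000)
    results, lock = [], threading.Lock()
    workers = [
        threading.Thread(target=detector, args=(topic, results, lock))
        for _ in range(num_workers)
    ]
    for worker in workers:
        worker.start()
    camera(frames, topic)
    for _ in workers:
        topic.put(SENTINEL)  # tell each worker to stop
    for worker in workers:
        worker.join()
    return results


if __name__ == "__main__":
    output = run_pipeline([f"frame-{i}" for i in range(10)])
    print(len(output), "frames processed")
```

The publisher never calls the detector directly, so adding more subscribers raises throughput without touching the camera side. A real broker such as Kafka adds persistence, partitioning and distribution across machines on top of this basic pattern.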

The turning point

Yes, you guessed it, I didn’t stop there. I knew that there was much more firepower I could get, accessible but hidden in the trenches of Tensor cores and C++ (such a spoiler). The deities were calling me, and my urge to find something better kept me burning the midnight oil. And then, the pandemic happened.

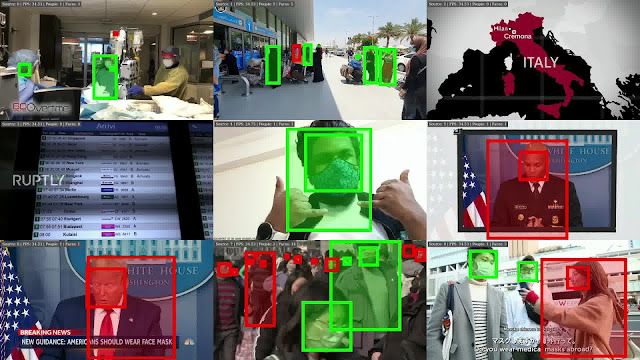

Hitting 1000 with Mask Detection and Social Distancing Enforcement

By now, I had a few tricks up my sleeve. IRIS’ pipeline now harnesses elements of GStreamer, an open-source, highly optimized multimedia processing framework. We used TensorRT to speed up our neural networks on NVIDIA’s GPUs and squeeze out every ounce of performance. The entire pipeline is written in C++ with CUDA-enabled code to parallelize operations. Finally, the models are lightweight: the person detector uses a smaller ResNet-like backbone, and our face detector is just 999 kilobytes in size with a 95% result on the WiderFace dataset. Both detectors are quantized to INT8 and FP16 precision, making them much faster. With quantization and the entire processing pipeline running on the GPU, IRIS’ new and shiny COVID-19 Enforcer ran at 1000 fps at peak performance for Social Distancing and 800 fps for both Social Distancing and Mask Detection.

So what’s next?

I am not done. Achieving one milestone allows me to set a bigger and better goal. Artificial Intelligence is in its infancy, and being at the forefront of making it commercially viable and available in all markets, especially India, has been my organization’s vision and mine. The endgame is to have AI for all, where people, be they developers or business owners, have the ability to quickly design and deploy their own pipelines. IRIS aims to be a platform that empowers individuals with precisely that, with the intention to democratize Artificial Intelligence, making it not a luxury for the few but a commodity for all.